Vignesh Ravichandran • May 22, 2025

The Good, The Bad, and The "Drunk Junior Engineer": Real Feedback on Cursor from Engineering Leaders

Real-world Perspectives on One of Tech’s Hottest AI Coding Tools

At Rappo, our mission centers on connecting founders with engineering champions who provide invaluable insights and feedback. As part of our commitment to “dogfooding” our own platform, I regularly engage with these champions – senior engineers, directors, and VPs from startups to enterprises – about the tools shaping their daily workflows.

One tool that consistently emerges in these conversations is Cursor, the AI-powered code editor that’s rapidly gained popularity. Rather than rely on marketing claims or social media hype, I thought I’d share unfiltered feedback from the engineering leaders using it daily.

What Engineering Leaders Love About Cursor

The enthusiasm for Cursor is evident among many champions. One senior engineer was particularly positive: “Had really, really good success with Cursor in the last several months.” This sentiment echoes across organizations where engineers have embraced the tool for its ability to accelerate certain tasks.

Several champions highlighted Cursor’s particular strengths in specific scenarios:

- Writing unit tests (a traditionally tedious but necessary task)

- Handling migration tasks between frameworks or languages

- Providing smart code completion that feels natural

The Reality Check: “Like a Drunk Junior Engineer”

Perhaps the most insightful feedback came from a director overseeing nearly 70 engineers, in an organization where Cursor has reached 70% daily adoption. Despite this impressive usage, their assessment was refreshingly nuanced:

“Feedback is mixed… some people think it’s like a drunk junior engineer. Other people find lots of benefit. Also depending on the task.”

That “drunk junior engineer” metaphor perfectly captures the current state of AI coding tools – sometimes brilliantly helpful, sometimes bewilderingly off-base. It’s an apt description that resonates with anyone who’s used these tools extensively.

The Technical Limitations Engineers Are Encountering

Behind the colorful metaphors are specific technical limitations that engineers consistently mentioned:

- Context selection remains a manual process: “Still a fair degree of handholding… manually selecting the context information” – making the promise of seamless AI assistance feel incomplete

- Control versus automation tension: One champion explicitly stated, “I never use auto model on cursor. I want to be in control,” highlighting how engineers still value oversight over pure automation

- Integration challenges with existing workflows: Rather than replacing workflows, many engineers see Cursor as an additional layer that doesn’t always mesh perfectly with established processes

- Need for customization: Several champions mentioned having to write extensive rules to get Cursor to work with their specific patterns and codebases

The Hybrid Approach Emerging in Engineering Teams

Perhaps the most interesting pattern across conversations was the hybrid usage model developing in engineering teams. As one champion explained: “We use Cursor for finishing the line they’re typing, then other tools for everything else.”

This pragmatic approach suggests that rather than using Cursor as an end-to-end solution, engineers are selectively applying it where it excels (autocomplete, simple code generation) while turning to other tools for more complex tasks requiring deeper context understanding.

What This Tells Us About the Future of AI Coding Tools

The feedback from our engineering champions reveals several important insights about Cursor and the broader landscape of AI coding tools:

- Fundamentals matter most: Cursor’s success appears to stem from excelling at basic tasks rather than attempting to revolutionize the entire development workflow

- Non-disruptive innovation wins: Engineers appreciate that Cursor enhances rather than disrupts their existing patterns

- Specialized excellence beats generalized mediocrity: Tools that do specific things extremely well gain more traction than those trying to do everything adequately

- The human-AI partnership is evolving: Rather than replacement, we’re seeing complementary relationships forming between developers and AI tools

Looking Forward

The mixed but generally positive feedback suggests we’re still in the early stages of AI coding tools. The “drunk junior engineer” metaphor is both a criticism and a remarkable achievement – creating something that can even approximate a junior engineer (albeit an occasionally confused one) represents significant progress.

The feedback also highlights substantial opportunities for innovation in areas Cursor doesn’t yet handle well: improved context understanding, complex refactoring, and better alignment with company-specific patterns and practices.

As AI tools continue to evolve, the most successful will likely be those that address these gaps while maintaining the strengths that have made tools like Cursor valuable additions to the modern engineering toolkit.

At Rappo, we connect founders with experienced engineering champions who provide invaluable insights for product development and technical decision-making. Join us to discover how these connections can accelerate your startup’s success.

More blogs for you

Rappo • Vignesh Ravichandran • May 29, 2025

Script Capital Backs Rappo to Tackle 0→1 GTM for Technical Founders

Rappo announces funding from Script Capital and shares its mission to connect technical founders with engineering champions.

Rappo • Vignesh Ravichandran • May 19, 2025

How Rappo Accelerated Sequin's Strategic Pivot to Postgres CDC

Learn how Rappo helped Sequin validate their strategic pivot to Postgres CDC through expert connections and technical feedback.

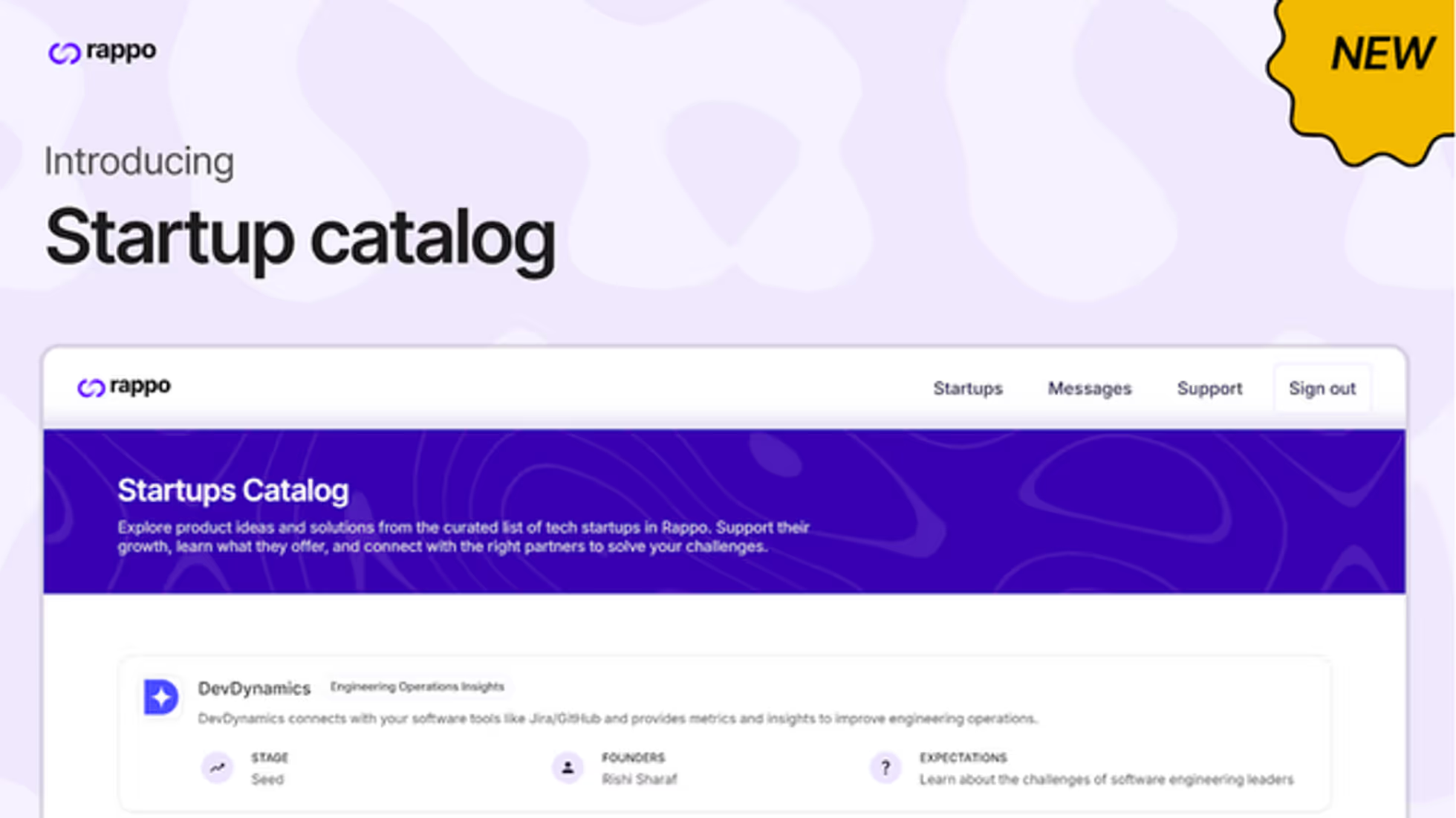

Rappo • Akshaya Sriram • Dec 17, 2024

Introducing Startups Catalog: Simplify Startups Discovery

We're excited to unveil the Startups Catalog — a powerful new feature designed to help you discover and connect with cutting-edge startups that are shaping the future of their industries.